Still, as long as traditional forms of journal and book publishing with prestigious legacy publishers serve as the highest-value currency of academia (see e.g. Moore 2019, Eve 2020), neither the creation of virtual workspaces, which, as we can see, have become indispensable enablers of modern science, nor the digital scholarly objects produced in them are rewarded as genuine scholarly outputs. In this paper, I aim to make a small contribution to the pressing efforts to re-harmonize research evaluation with novel research practices in textual sciences, and to initiate an open discussion towards a community consensus over what matters in the evaluation of VREs. Candela, Castelli, and Pagano’s now canonical study on the five core criteria of VREs (Candela, Castelli, and Pagano 2013) will serve as a starting point for the discussion, which will be structured around the following questions:

- Why is critical engagement with VREs key to their formal recognition, also in terms of tenure and promotion?

- What are the mechanisms for the integration of VREs into formal (and conventional) systems of research assessment so that scholars can receive proper credit for them?

- What are the emerging future perspectives that will shape the critical assessment of VREs, and how does this assessment differ from that of published papers and books?

- Is peer review the most appropriate evaluation framework for them?

The paper presents findings emerging from a specific task force of the OPERAS-P project [1], “Quality assessment of SSH research: innovations and challenges.” The task force aims to support the development of the relevant OPERAS [2] activities and services by informing them about current trends, gaps and community needs in research evaluation in the Social Sciences and Humanities. This entails (1) teasing out the underlying reasons behind the persistence of certain proxies in the system (such as the ‘impact factors of the mind’ that continue to assign tacit prestige to certain publishers and forms of scholarship) and (2) analysing emerging trends and future innovation in peer review activities within the Humanities domain. The methodology includes desk research, case studies, and in-depth interviews with 43 scholars about challenges, incentives, community practices and innovations in scholarly writing and peer review. In addition to the case studies and interviews, feedback on the topic from scholarly events, such as the VREs and Ancient Manuscripts Conference 2020 (Clivaz and Allen 2021), has also been integrated into our work.

Realigning research evaluation with research realities of digital scholarship

Emerging frameworks for the critical assessment of VREs

Another crucial step towards taking the evaluation of VREs to the next level is to define them and position them within the generic discourse of tool criticism by enabling a clear distinction of what is a VRE and what is not. To this end, Candela, Castelli, and Pagano’s now seminal 2013 study (Candela, Castelli, and Pagano 2013) defines five core criteria of VREs:

- It is a web-based working environment;

- It is tailored to serve the needs of a community of practice;

- It is expected to provide a community of practice with the whole array of commodities needed to accomplish the community’s goal(s);

- It is open and flexible with respect to the overall service offering and lifetime;

- It promotes fine-grained controlled sharing of both intermediate and final research results by guaranteeing ownership, provenance and attribution.

Their attempt to define VREs inevitably signals expectations towards their optimal design and implementation and can therefore be read as a set of preliminary measures that guide their evaluation. As such, we see a remarkable shift from paper-centric evaluation criteria towards taking into account the specificities of digital scholarship. First, the situatedness and non-neutrality of VREs are clearly signalled. That is, the quality of a VRE is not an absolute value but essentially depends on the context and purpose of the environment and on the research questions that gave rise to it. Closely related to this, we see a strong emphasis on the defining role of community aspects, and on how VREs are primarily designed to serve certain communities and their scholarly needs of different kinds (e.g. access to primary sources; data discovery, processing, analysis, enrichment, visualization and publication; exchanges with peers etc.). The extent to which a VRE remains sensitive and flexible to such community needs has become a widely recognized, recurrent key quality measure in later approaches as well. The following excerpt from a discussion in the context of the DESIR project, a project primarily dedicated to connecting infrastructures and knowledge bases for Digital Humanists across Europe, exemplifies this well:

Next, establishing publication venues specifically dedicated to tools and environments in Digital Humanities and Cultural Heritage studies is certainly a big step towards making them more visible and an integral part of the scholarly discourse. In this respect, the launch of the first such journal, RIDE (Review Journal of the Institute for Documentology and Scholarly Editing), in Germany in 2014 clearly marks a new era. Since then, RIDE has been providing an increasingly renowned platform for experts to evaluate and discuss current practices, tools and environments. The novelty of the journal’s approach to tool criticism is that it evaluates not only the traditional aspects of scholarship that we saw in the first peer review attempts but also the methodology and technical implications. To this end, the journal has detailed guidelines and a system of criteria in place for reviewing scholarly digital editions and tools; these reflect the complexity of digital tools and environments but are still generic and high-level enough to be applicable to a wide range of artefacts (‘Criteria for Reviewing Digital Editions and Resources – RIDE’ n.d.).

The shortage of evaluative labour: Is peer review the most appropriate evaluation mechanism for the quality assessment of VREs?

The deep embeddedness of peer review in the traditional paradigm of the research article, which persistently serves as the highest-value currency of academia, is not the only major difficulty that tool makers face in their struggle to gain formal recognition for born-digital scholarly objects as genuine scholarly outputs. The shortage of evaluative capacities is another, at least equally crucial factor that prevents the large-scale extension of peer review to tools, environments, code, and scholarly data of different kinds. As the number of scholarly publications is still increasing year by year (see e.g. Bornmann and Mutz 2015), not independently of the publish-or-perish pressure on scholars (Plume and van Weijen 2014), which plays a significant role in conserving traditional proxies of research excellence, scholarly publication venues are becoming more and more challenged in their capacity to find and attract reviewers, especially for highly specialized, niche topics. In light of the difficulties that traditional peer review mechanisms are already facing, their large-scale extension to a wide range of novel digital scholarly objects seems unrealistic, especially considering that the proper evaluation of these complex scholarly outputs requires very specific knowledge, usually coming from the intersection of different knowledge areas: a specific Humanities discipline, Information Technology, Data Science or Infrastructure Engineering. These difficulties around integrating digital scholarly artefacts into conventional research assessment mechanisms are clearly reflected in the following interview statement of a Digital Humanities scholar:

On the other hand, critical engagement with research tools and environments is not limited to peer review. In the last couple of years, especially on the European research policy horizon, we have seen the emergence of certification and evaluation frameworks that do not directly originate from the scholarly discourse. These frameworks focus more on the infrastructural maturity of services, such as clearly defined access protocols, long-term availability and the interoperability of standards, in order to give science funders an idea of the sustainability and reliability of the many services with minimum administrative effort. An important consideration behind them is to set a quality threshold for the agile production of digital scholarly artefacts that are connectible to each other, instead of creating knowledge silos that can only be used in the context of one single project for a short period of time.

FAIR play? The central role of disciplinary cultures in the successful implementation of the FAIR frameworks

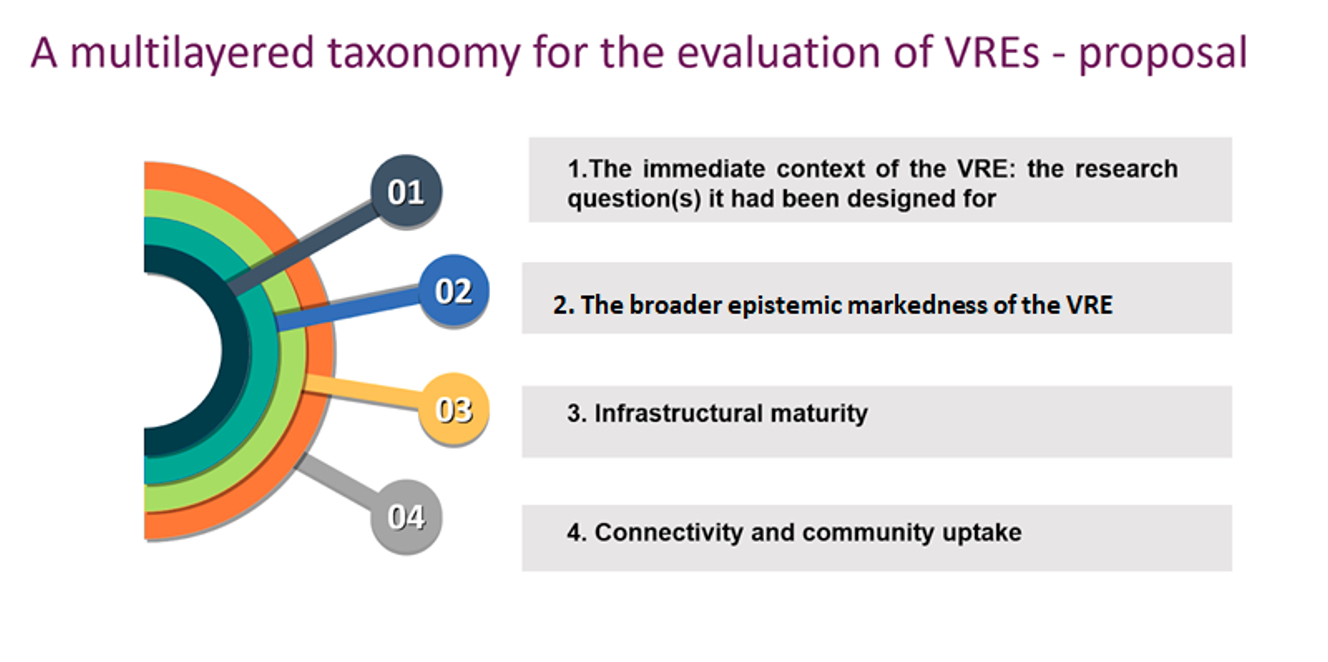

The complexity of this kind of evaluation is also reflected in the fact that, in the context of VREs, a FAIR assessment can manifest itself at two levels:

- Since the design of a VRE defines the quality of the outputs made in it, and in many cases also the access to them and to their primary sources, the first-level assessment could focus on how VREs enable FAIR data collection, creation, curation and publication (e.g. whether provenance and copyright information is kept clear, whether the data model and data standards applied are in line with community standards, whether access conditions to primary, interim and secondary resources are clearly defined etc.).

- The second level could assess the VRE as a complex, digital scholarly object itself (e.g. its connectivity to data repositories, maturity of operation, clear licensing etc.).

It is yet to be seen whether and to what extent communities around VREs will embrace FAIR or certification systems as alternative evaluation frameworks that might complement traditional peer review in the future. If translated, adopted and deeply embedded in research realities, these emerging alternative assessment frameworks carry the potential to address some of the crises peer review is facing in the digital realm and to put novel mechanisms in place that allow for the recognition of digital scholarly objects on their own terms, without the paper-centric legacy of traditional peer review. While the primary aim of FAIR and other certification frameworks is to assess the technical and legal maturity of scholarly objects and environments as parts of our digital commonwealth, it is still peer review practices of different kinds that anchor them in scholarship (by looking at their epistemic markedness, the arguments they convey etc.), and therefore the two types of assessment are complementary.

Conclusion and a proposal for a synthesis